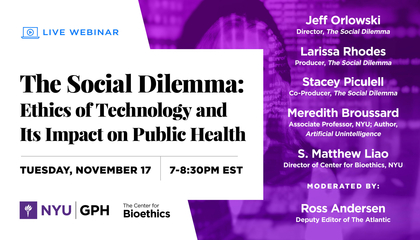

We tweet, we like, and we share — but what are the consequences of our dependence on social media? GPH recently hosted a webinar entitled “The Social Dilemma: Ethics of Technology and Its Impact on Public Health” to address this question.

Based on the Netflix documentary “The Social Dilemma,” the thought-provoking discussion came at a time when so much of our daily lives has moved online due to the ongoing COVID-19 pandemic. The webinar focused on how social media and technology are shaping our lives and mental health, as well as the ethical implications of technology's ever-present influence.

Panelists included prominent creators and thought leaders in ethics and technology: Jeff Orlowski, director, The Social Dilemma; Stacey Piculell, co-producer, The Social Dilemma; Larissa Rhodes, producer, The Social Dilemma; Meredith Broussard, associate professor at NYU and author of Artificial Unintelligence; and S. Matthew Liao, director of the Center for Bioethics at NYU. The discussion was moderated by Ross Andersen, deputy editor of The Atlantic.

The event began with a conversation about the genesis of the film and how the filmmakers decided to address big questions about our society in fresh ways. Existential threats to humanity were a recurring theme in the discussion of both technology and climate change, as was the way the two issues are inextricably linked.

The panelists were then asked to connect social media and technology to public health. They identified mental health and behavioral addiction, along with political polarization and its effect on public health precautions such as wearing masks, as key public health concerns that technology exacerbates.

A discussion followed on the past, present, and future responsibilities of “tech titans” in solving the problems that have arisen because social media is intentionally designed to exploit human psychology. The panelists all agreed that tech companies have a moral obligation to think proactively about the consequences of their actions, given that their platforms have the power to make an impact on a global scale.

The panelists noted that, as with climate change, individual choices regarding social media can only do so much, which points to the need for systemic remedies. Orlowski highlighted that in both cases, a product was built that people thought would benefit society, but its unintended consequences were not taken into account, leaving a Pandora’s box that has already been opened. Broussard called for individual action plus regulation at the state, national, and global levels to ensure that tech companies comply with the law, even in digital spaces.

A discussion ensued on technology’s role in promoting political conspiracies, the dangers of rapidly spreading fake news, and how the foundation of a functional society is a shared understanding of what is true. Algorithms deliberately show users content related to what they have viewed before or may be interested in now, which drowns out opposing viewpoints.

The issue of echo chambers and conspiracy-mongering is transpartisan, leading people on both sides of the aisle to see completely different sets of information. Orlowski and Liao emphasized the importance of a shared sense of truth in order to reckon with the problems that technology creates.

Finally, the panelists discussed alternative versions of these technologies that would preserve their utility while reducing societal harms. Liao stressed the need for a perspective in which human rights are not only protected in the digital realm but actively promoted. Broussard focused on the difference between what is popular and what is good, and noted that a world beyond the dominance of social media and big tech is possible, though it may not be as profitable.

Orlowski concluded by highlighting that the current business models of major social media companies are based on selling advertisements, and he urged the audience to imagine what it would mean to build deeper relationships with close friends and family through socially focused technology, rather than through today’s platforms, which are driven by AI and profit.

Follow-up questions from the audience included how to address the tension between privacy concerns and the need for technological surveillance in the context of contact tracing; how to curb the spread of misinformation; and how to regulate the technology industry with bipartisan support.